Docker Overview

Docker is software designed to containerize applications. To containerize an application is to package it, together with everything it needs to run, into a small, lightweight environment that can run on any machine with Docker installed.

Docker vs. Virtual Machines

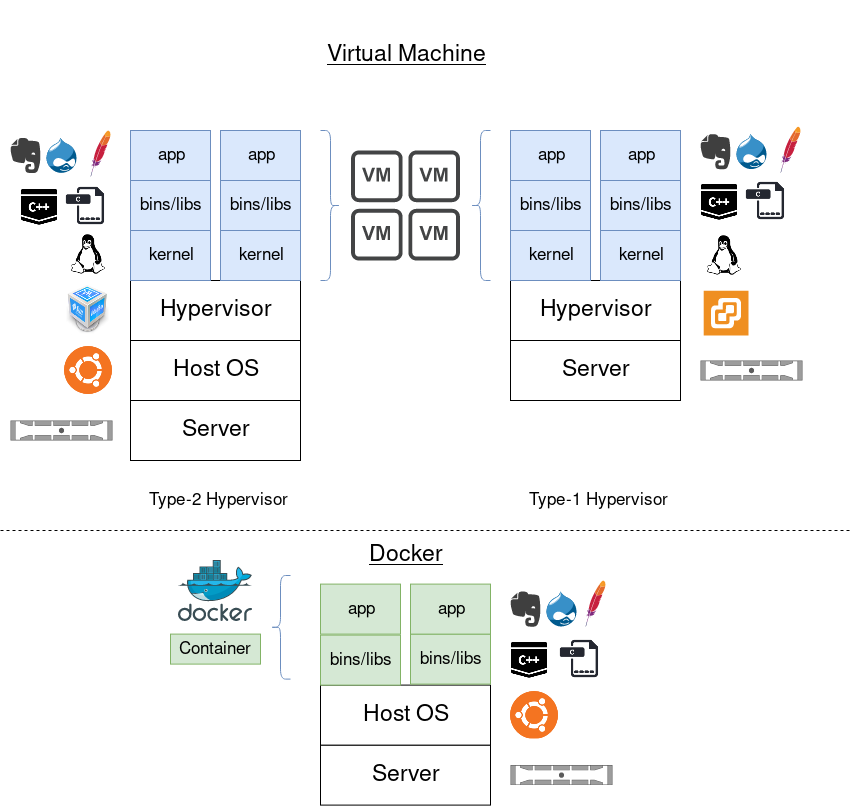

Docker containers are often confused with full-blown virtual machines, but the two work differently. The major difference is that Docker containers run on top of the host operating system (e.g., Windows, RHEL, Debian, macOS), while virtual machines run on top of a hypervisor (Type 1: ESXi, Hyper-V; Type 2: VirtualBox, VMware Player).

Each virtual machine must have its own emulated hardware, courtesy of the hypervisor, and its own full-blown operating system installed. This creates a lot of unnecessary overhead when you only want to run a single application on it. Docker containers do not require their own emulated hardware, or even a full operating system of their own. They only require a minuscule portion of the file system and compute power of the host operating system, which is managed by the Docker engine. This is why Docker containers are considered faster and more lightweight than full-blown virtual machines.

What Problems Does Docker Solve?

Works-on-my-Machine Syndrome

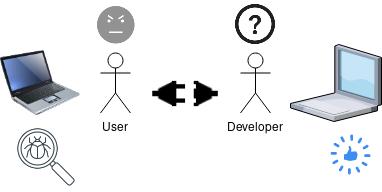

The works-on-my-machine syndrome has long plagued the software development industry. Developers write and test their application in a specific corporate development/test network, or on their own personal machine. The users of the application, however, run the software on their own machines or in their own environments.

This creates a major challenge in portability and troubleshooting, in that developers often have to troubleshoot application issues that occur on the user’s machine/environment, but not on the developer’s machine/environment. Because the developer cannot reliably reproduce the issue, it becomes much harder to solve.

Docker fixes this issue by providing a packaged environment along with the application. As long as developers and users run the same Docker image, the environment inside the container is identical, so issues can be reproduced far more easily.
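As a rough sketch of what "the same image" means in practice, the commands below pull and run one pinned image. The tag `ubuntu:22.04` is an assumption chosen for illustration and does not come from this article. Note that tags can be re-pointed by the publisher over time, so the digest printed by the last command is the stricter way to confirm two machines hold exactly the same image.

```shell
# Illustrative image reference (an assumption, not from the article).
IMAGE="ubuntu:22.04"

# Download the image from the default registry.
docker pull "$IMAGE"

# Print the OS release information from inside the container. This reports
# the image's Ubuntu release, not the host's operating system.
docker run --rm "$IMAGE" cat /etc/os-release

# Show the content digest, which identifies the exact image that was pulled.
docker image inspect --format '{{index .RepoDigests 0}}' "$IMAGE"
```

A developer and a user who compare digests and find them equal are running the same packaged environment.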

Portability

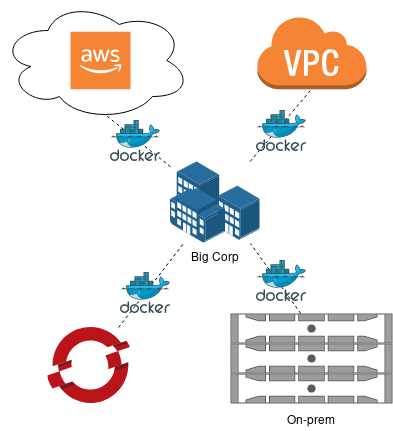

In this ever-changing infrastructure age, many companies are adopting multicloud strategies. This allows them to host applications, server infrastructure, or storage across multiple cloud platforms, which reduces reliance on a single vendor or a single service's availability, among other benefits. In addition, many companies still have on-prem infrastructure that differs vastly from the systems hosted by cloud providers.

Docker, with its packaged environment, allows applications to run on any infrastructure that can run Docker, whether it be AWS, Azure, or on-prem. Companies can move away from one cloud provider without worrying about modifying their application to work properly on the new platform. The application runs in a container, and that container can run anywhere Docker is available.

Dependencies

Shared libraries are very prevalent in modern software. They allow applications to be developed faster by avoiding unnecessary code rewriting, but they also create a dependency challenge for users. Users must install not only your application but also the software it depends on for the application to function properly. That dependency software may have its own dependencies, and so on.

We’ve all been in dependency hell, and it is no more enjoyable for end users trying to use your application. Docker resolves this issue by packaging all application dependencies within the image itself. The user need only run the correct Docker image, and the application, all of its dependencies, and their correct versions are contained in it.

Scalability

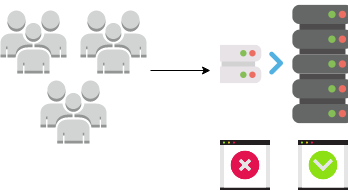

Modern companies are often rapidly growing as well, and must adapt their infrastructure with similar speed to match the increased traffic/usage volume. Traditionally, this involved manual intervention - a team would have to install the application on a new machine, install the dependencies, perform testing, then make the new server available to users. This strategy is no longer sufficient for the performance requirements of today.

Products known as container orchestration tools can help combat this issue. These tools allow management/clustering of multiple containers spread across one or more host operating systems. A simple example of one of these products is Docker Swarm - an orchestration tool native to Docker itself. This tool allows you to manage multiple Docker containers run on multiple hosts in a simple manner, but is lacking in some enterprise features. The more enterprise-focused orchestration tool is Kubernetes - similar to the functionality of Docker Swarm, but with more features and thus, added complexity.

Orchestrators not only allow you to manage several containers across multiple hosts, but also make those containers scalable. With a single command, you can increase the number of replicas your application has, depending on available resources. Since most containers start up in seconds, they can scale very quickly. More robust orchestrators, like Kubernetes, have the ability to auto-scale your container clusters depending on utilization and workload.
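To make the "single command" concrete, here is a minimal sketch for both orchestrators mentioned above. It assumes a Swarm service and a Kubernetes Deployment, both named `web`, already exist; that name, the replica count, and the autoscaling thresholds are hypothetical values picked for illustration.

```shell
# Hypothetical service/deployment name and replica count.
SERVICE="web"
REPLICAS=5

# Docker Swarm: set the number of replicas for a service.
docker service scale "${SERVICE}=${REPLICAS}"

# Kubernetes: set the replica count for a Deployment of the same name.
kubectl scale deployment "$SERVICE" --replicas="$REPLICAS"

# Kubernetes: scale automatically between 2 and 10 replicas, targeting
# 80% average CPU utilization across the pods.
kubectl autoscale deployment "$SERVICE" --min=2 --max=10 --cpu-percent=80
```

The first two commands are manual scaling. The last one hands the decision to the orchestrator, which is the auto-scaling behavior described above.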

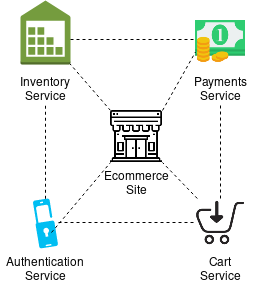

Microservices

The industry is also moving more towards microservice architecture. This architecture promotes structuring an application into several individually operating services that combine into one overarching application. This allows easier management and testing for complex applications, while granting the flexibility to refactor one component’s code without altering another.

Docker aids in the deployment of microservices, as each microservice can be packaged in its own container, and that container can be easily deployed or destroyed. Container images can be versioned with tags, much like source code, and a service can be updated or rolled back without affecting the availability of other microservices.

Terminology

The Docker daemon

The Docker daemon (dockerd) is a background process that handles the creation and management of Docker images, containers, and services. The Docker client (docker) often communicates with the daemon over the native RESTful API.

The Docker client

The Docker client (docker) is the command line interface that interacts with the Docker daemon.
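The relationship between client and daemon can be seen by asking the same question both ways. This sketch assumes a typical Linux install where the daemon listens on the Unix socket `/var/run/docker.sock`. The path differs on other platforms and custom setups, and access to the socket usually requires root or membership in the `docker` group.

```shell
# Default Unix socket on a typical Linux install (an assumption).
SOCK="/var/run/docker.sock"

# Ask through the client: prints both client and daemon (server) versions.
docker version

# Ask the daemon directly: the same information from the Engine API's
# /version endpoint, returned as JSON.
curl --silent --unix-socket "$SOCK" http://localhost/version
```

The `docker` command is, in effect, a convenient front end for requests like the `curl` call.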

Images

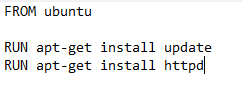

An image is a read-only template from which Docker containers are created. The instructions for building an image are contained within a Dockerfile, which lists steps to perform in sequential order. At the end of the steps, the result is a custom Docker image.

These steps can vary in complexity, but can be as simple as below:

This Dockerfile simply builds from the latest Ubuntu image, installs the latest updates using apt-get, and installs the Apache web server.

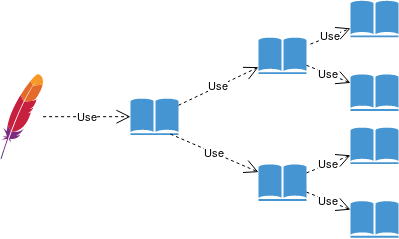

One thing of note is the first line of the Dockerfile - FROM ubuntu. Images can be layered on top of each other, in that images can be built from other images. In my example, I simply needed a web server, so I just leveraged an existing image (Ubuntu) and installed the web server on top of that. This is much more efficient than trying to craft an operating system image from scratch, just for the single ability to run a web server.
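For readers who prefer text to a screenshot, a Dockerfile matching the description above might look roughly like the following. This is a sketch rather than a transcription of the image: the `ARG` and `CMD` lines, the `apache-demo` directory, and the `my-apache` tag are additions assumed here for illustration.

```shell
# Write a Dockerfile into a scratch directory (directory name is illustrative).
mkdir -p apache-demo
cat > apache-demo/Dockerfile <<'EOF'
# Start from the official Ubuntu image on Docker Hub.
FROM ubuntu

# Avoid interactive prompts from apt during the build (an addition for
# illustration, not described in the article).
ARG DEBIAN_FRONTEND=noninteractive

# Install the latest updates, then the Apache web server.
RUN apt-get update && apt-get upgrade -y && apt-get install -y apache2

# Run Apache in the foreground so the container keeps running
# (also an addition, not described in the article).
CMD ["apachectl", "-D", "FOREGROUND"]
EOF

# Build an image from that Dockerfile; the tag "my-apache" is hypothetical.
docker build -t my-apache apache-demo
```

Each instruction adds a layer on top of the Ubuntu base, which is the layering described above.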

Container

A container is a running instance of a Docker image. The steps performed in a Dockerfile produce an image, and running that image produces a container. The container can be started, stopped, destroyed, moved, etc. - all handled by the Docker daemon.

You send these commands to the Docker daemon by using the Docker client (docker).
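A short sketch of that lifecycle using the client follows. It uses the official `httpd` (Apache HTTP Server) image from Docker Hub so that it stands on its own. The container name `web-demo` and host port 8080 are hypothetical choices.

```shell
IMAGE="httpd:2.4"   # official Apache HTTP Server image on Docker Hub
NAME="web-demo"     # hypothetical container name

# Create and start a container in the background, mapping host port 8080
# to port 80 inside the container.
docker run -d --name "$NAME" -p 8080:80 "$IMAGE"

# List running containers.
docker ps

# Stop the container, then start the same container again.
docker stop "$NAME"
docker start "$NAME"

# Stop and remove the container; the image itself stays on disk.
docker rm -f "$NAME"
```

Removing a container does not remove its image, so a fresh container can be created from the same image at any time.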

Registry

Registries store Docker images. These can be privately hosted, or available to the public.

You may be wondering where Docker pulled that Ubuntu image from in my previous Dockerfile example. By default, Docker is configured to obtain images from the standard public registry on the Internet: Docker Hub. Anyone can publish images to Docker Hub, and vendors and FOSS maintainers often make official images available there. Prefer these where possible, as they are maintained by the author and used by thousands or millions of users. My example uses the official Ubuntu image provided by Canonical on Docker Hub.
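The default registry shows up in how image names are resolved. In the sketch below, the private registry host and repository (`registry.example.com/team/my-app:1.0`) are hypothetical placeholders, so that last pull is expected to fail unless you substitute a real registry.

```shell
# Hypothetical private registry host and repository, for illustration only.
PRIVATE_IMAGE="registry.example.com/team/my-app:1.0"

# These two pulls refer to the same image: Docker expands the short name
# to the Docker Hub registry, the "library" namespace used for official
# images, and the "latest" tag.
docker pull ubuntu
docker pull docker.io/library/ubuntu:latest

# Images in another registry are addressed by putting its hostname first.
# Private registries usually require authenticating beforehand.
docker pull "$PRIVATE_IMAGE"
```

This is why `FROM ubuntu` works in a Dockerfile without naming any registry.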

What next?

Reference the official Docker documentation for more info, or proceed on to getting Docker up and running.